All products and services featured are independently chosen by editors. However, Billboard may receive a commission on orders placed through its retail links, and the retailer may receive certain auditable data for accounting purposes.

Trending on Billboard

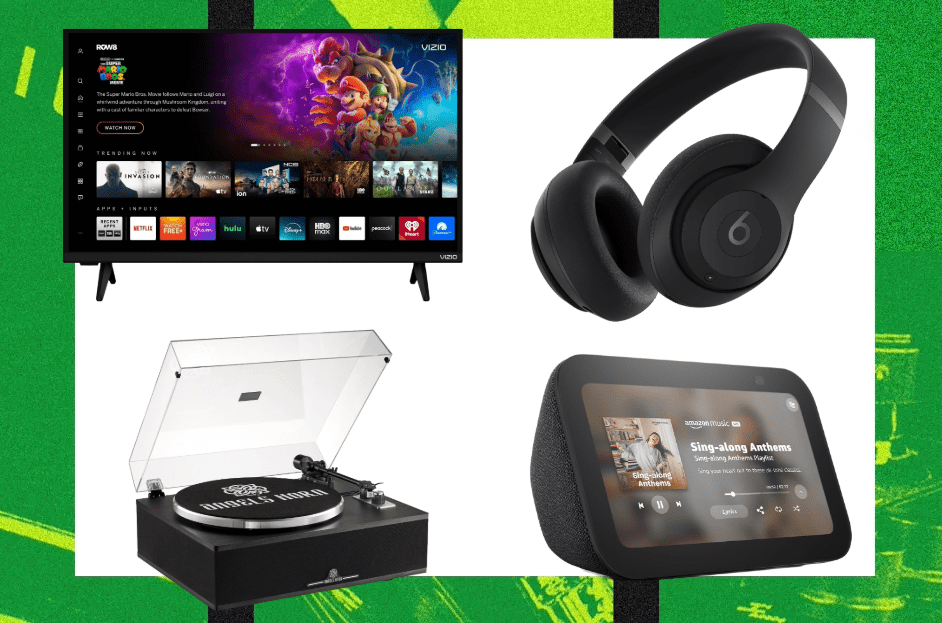

Holiday gifting is in full swing, which can often mean shelling out on expensive electronics for that special someone. Luckily, DoorDash is helping shoppers save big without leaving the couch. The delivery site is offering exclusive deals up to 50% off favorite tech gear including Apple, JBL, Beats and more.

Whether you’re scrambling for last-minute gifts or just want to avoid the holiday crowds, DoorDash makes it easy to get the perfect tech gift delivered straight to your door. Just shop through the DoorDash website or app to access these deals, and have your gifts delivered in hours instead of waiting several days for them to arrive.

Best DoorDash Audio & Gaming Deals

Some stand-out deals we’ve seen from DoorDash include up to 30% off on JBL speakers, a favorite of music-lovers everywhere thanks to its sound quality, from select retailers including Best Buy and Target.

For those looking to get serious about their gaming setup, you can also snag up to 50% off Razer’s BlackShark Gaming Headset from select stores. The BlackShark Headset is equipped with a mic, great for co-op gaming, and closed earcups that fully cover the ears to prevent noise from leaking into the headset, meaning you can focus in on the game and tune out unwanted noise entirely.

Looking for new headphones? DoorDash also has stellar deals on Beats, a brand made for music by a musician. The brand’s Studio Pro Wireless Over-The-Ear Headphones are marked down at Best Buy and Target. The headphones come in multiple colorways, perfect for everyone on your list, and boast incredible audio performance comparable to more expensive models.

Best DoorDash Apple Deals

For loyal Apple fans who are constantly misplacing things, you can get 19% off a pack of four AirTags from select stores including Staples and Best Buy. That’s roughly $16 per AirTag, an impressive markdown when a single tag can run you up to $30. Pop them in your purse or gift them to the ever-forgetful family member.

There are more Apple savings waiting beyond AirTags. DoorDash is running 10% off an 11-inch Apple iPad from select retailers including Target, Best Buy and Staples so you can take your gaming and streaming on the go.

Another stellar Apple deal we’ve spotted is AirPods 4 Wireless Earbuds starting at just $120 at select stores. The fourth-gen model offers active noise cancelling unlike the previous models, a feature that allows you to focus solely on the music, tuning out the excess noise in the process.

More Perks with DoorDash

If the deals weren’t sweet enough, all eligible customers get their first delivery order via DoorDash free. Want more perks? Members of DoorDash’s DashPass (free for the first 30 days) can take advantage of $0 delivery fees on every order, lower service fees on eligible orders, member-exclusive deals, between 5% and 10% off on Lyft rides (up to four per month) and 5% back in DoorDash Credits on pickup orders. Plus, annual plan members get HBO Max Basic With Ads included (activated by Dec. 16). If you haven’t thought up a gift, a DashPass is a great place to start, especially for the foodie in your life.

Following your free 30-day trial period, DashPass will run you $9.99 a month or $96 a year (or $4.99 per month or $48 per year with the student discount).

11/25/2025

The best tech and electronics deals include new savings on headphones, earbuds, TVs, turntables and more

Suno and Warner Music Group (WMG) have signed a licensing agreement to forge “a new chapter in music creation,” as Suno CEO Mikey Shulman put it in a company blog post. The deal effectively settles WMG’s part of the $500 million copyright infringement lawsuit against Suno, which it filed alongside UMG and Sony Music last summer. (UMG and Sony Music’s part of the lawsuit is still ongoing.)

The deal also includes Suno’s acquisition of the WMG-owned live music discovery platform Songkick, which will continue to run as-is. “The combination of Suno and Songkick will create new potential to deepen the artist-fan connection,” says a press release about the deal.

As Suno’s blog post puts it, the licensing agreement with WMG “introduces new opportunities for artists and songwriters to get paid,” but it does not describe exactly how. It does, however, note that participating is optional for WMG artists and songwriters, who can “opt-in” to having their names, images, likenesses, voices and compositions used in AI-generated music as they wish. A press release about the deal also notes that it will “compensat[e] and protect artists, songwriters and the wider creative community.”

The blog post also states that the WMG partnership “unlocks a bigger richer Suno experience for you,” including “new, more robust features for creation, opportunities to collaborate and interact with some of the most talented musicians in the world.” It adds that the deal “preserves the magic of Suno and the way you’ve come to love creating with it.”

News of the agreement comes just weeks after Universal Music Group (UMG) forged a licensing deal with Udio, which was also sued by the majors in a near identical lawsuit. That deal resulted in Udio pivoting its service significantly, becoming more of a fan-engagement platform where users could play with UMG copyrights whose rights holders opted into the platform’s “walled garden” environment, rather than one where users can create and download AI-generated songs at the click of a button. WMG followed suit with a similar agreement on Nov. 19.

The press release states that in 2026, Suno will make several changes to the platform, including launching new and improved licensed music-making models, but it is not pivoting away from its core offerings. When that new model rolls out, the release says that the current one will be “deprecated,” given that it is not licensed. “Moving forward, downloading audio will require a paid account. Suno will introduce download restrictions in certain scenarios: specifically in the future, songs made on the free tier will not be downloadable and will instead be playable and shareable,” the release adds. Paid users of Suno will also be limited in the number of downloads they can make each month; to unlock additional downloads, they will have to pay extra fees.

“This landmark pact with Suno is a victory for the creative community that benefits everyone,” said WMG CEO Robert Kyncl in a statement. “With Suno rapidly scaling, both in users and monetization, we’ve seized this opportunity to shape models that expand revenue and deliver new fan experiences. AI becomes pro-artist when it adheres to our principles: committing to licensed models, reflecting the value of music on and off platform, and providing artists and songwriters with an opt-in for the use of their name, image, likeness, voice and compositions in new AI songs.”

Suno CEO Shulman added: “Our partnership with Warner Music unlocks a bigger, richer Suno experience for music lovers, and accelerates our mission to change the place of music in the world by making it more valuable to billions of people. Together, we can enhance how music is made, consumed, experienced and shared. This means we’ll be rolling out new, more robust features for creation, opportunities to collaborate and interact with some of the most talented musicians in the world, all while continuing to build the biggest music ecosystem possible.”

Rooker was happy to reprise the fan-favorite character of Mike Harper.

The motion capture and technology have significantly improved since Black Ops 2.

Rooker was surprised by the lasting impact of the Mike Harper character.

Michael Rooker is back as Mike Harper in Call of Duty: Black Ops 7, and he had plenty to say about his return to the iconic video game franchise.

The cat’s out of the bag: Mike Harper survived the events of Call of Duty: Black Ops 2, meaning the choice to save him was the correct one. That allows him and his wisecracking self to be a part of JSOC and help Desmond Mason take down the Guild in Black Ops 7’s reality-warping campaign.

HHW Gaming got to speak with Michael Rooker about making his return to the iconic video game franchise, and basically, he’s more than happy to be back.

Michael Rooker Says It Was A Great Choice To Bring Mike Harper Back

Activision / Treyarch / Call of Duty: Black Ops 7

The first question was how the game’s developers pitched him on Harper’s return in Black Ops 7, and how they made the choice not to shoot Harper in Black Ops 2 the canon decision.

“Oh, come on. Great choice. Mike Harper, fan favorite,” Rooker begins. “That whole concept of making that choice, that moral choice, to shoot Mike Harper, or not shoot Mike Harper. Shoot Mike Harper and save the world. Or hem the world. I’m not shooting my buddy. And that was a big choice for people, for gamers, and it’s a big deal. And so, that really, I think, embedded that character even more in folks’ minds. So now that Mike Harper is back in Black Ops 7, it’s just, oh, yeah. Oh, yeah. It’s about time. I know that’s what they’re going to be thinking, because that’s what I thought. I was like, ‘Yeah, well, it’s about time. Why not?’”

Rooker Was Ready To Be A Part of The Design Process

Activision / Sledgehammer Games / Treyarch / High Moon Studios / Call of Duty: Black Ops 7

Black Ops 2 was released more than a decade ago, meaning the design process for video games has, for all intents and purposes, gotten more sophisticated.

We spoke with Rooker about taking part in the process for Black Ops 7, and he revealed it’s a bit of a mix of new and old.

“It was a big difference in the facial motion capture. We have cameras now that record the motion capture for the face, the eyes, the mouth, and all that stuff,” Rooker revealed to HHW Gaming. “Technically, it’s going to be way more detail. They’ve got the making of this game; the chips are different. All the internal stuff that they’re using to put everything together, to make the hair, was a big thing back then. The hair is a big thing. So they’ve gotten way better with that, and it’s just phenomenal stuff. And the motion capture is basically the same. The main thing for me was that it threw me for a loop for about five minutes, until I got used to having this camera in my face and the lights shining in my eyes. Once you’re over that, then it’s basically, yeah, I can do this scene with someone.”

He continued, “The ADR room is basically old, tried-and-true stuff. There’s not a lot of big… There may be a lot… There very well may be advances in the recording aspect of this stuff that I don’t know about. I’m not a professional recording artist, or anything like that, so I don’t know the technology that goes in behind that. But I know for a fact that my job inside the studio hasn’t really changed.”

“As an actor, I’m still there, and try to get in the moment, or back to that moment, and make it as real as possible. That’s my goal anyway.”

Rooker Was Surprised At The Lasting Impact of Mike Harper Since Black Ops 2

Activision / Sledgehammer Games / Treyarch / High Moon Studios / Call of Duty: Black Ops 7

One thing that surprised the Guardians of the Galaxy and The Walking Dead star was how popular Mike Harper became after his appearance in Black Ops 2, which led the developers to bring him back.

“Oh, you know what? I was surprised back then, back in the day, how popular that character ended up being,” Rooker revealed. “And they were always telling me, ‘Oh, this is going to be great. They’re going to love this. People are going to love this.’ And they did. People absolutely, absolutely, dug it. And I didn’t really stay with games that much, I did that, but I moved on. I did film and stuff like that. And as you know, gamers and cinema people, kind of different animals.”

“But this is going to be really a good combination now, because we’re getting to a point in gaming, where it’s looking very cinematic,” he continued.

“And now it’s unreal that they’re going to make a film based on the biggest game franchise in the world. So it’s about time, because I’m like, ‘Yeah, do it, man. Get these gamers off the couch, and into the theater.’”

Who knows, maybe Mike Harper will end up in the film adaptation as well; it only makes sense.

You can peep our entire interview above, where we touched on other topics as well. Call of Duty: Black Ops 7 is out now.

iHeartRadio’s chief programming officer and president, Tom Poleman, sent a letter to staff on Friday (Nov. 21), obtained by Billboard, pledging that the company doesn’t and won’t “use AI-generated personalities” or “play AI music that features synthetic vocalists pretending to be human,” among other promises.

The pledge marks the beginning of iHeart’s new “Guaranteed Human” program, which will also see the company publish only “Guaranteed Human” podcasts, according to Poleman’s letter.

Starting Monday (Nov. 24), “‘Guaranteed Human’ is a core part of our brand,” Poleman wrote. “You’ll hear it in our imaging, and we want listeners to feel it every time they tune in.” iHeartRadio DJs must now add a line to their hourly legal IDs about being “Guaranteed Human.”

“Remember, this isn’t a tagline — it’s a promise,” Poleman added. “And it’s part of every station’s personality.”

News of the pro-human content initiative comes after recent headlines about the growth — and increasing indistinguishability — of AI-generated voices, songs and podcasts. According to French streaming service Deezer, 97% of participants in a recent study could not tell the difference between AI and human-made songs; the platform also estimates that 50,000 full AI-generated songs are added to their service every day. Billboard also recently revealed that AI music company Suno is generating 7 million tracks a day.

While most of these AI songs aren’t receiving many streams or sales, there have been several breakthroughs in recent weeks. This includes Xania Monet, an artist whose work was recorded using Suno and paired with AI images. Her song “How Was I Supposed to Know?” recently debuted on the Adult R&B Airplay chart and has been put in rotation by a handful of radio stations across the U.S.

In terms of radio DJs, an AI radio personality called DJ Tori has taken over the undesirable overnight and weekend shifts at a hard-rock radio station in Hiawatha, Iowa, called KFMW Rock 108, according to a Rolling Stone report. Her voice and image — that of a fashionable tattooed rocker — are both AI-generated. Meanwhile, will.i.am launched RAiDiO.FYI, an interactive AI radio app featuring synthetic voices that tell you about the songs that are playing. Spotify also continues to push its AI DJ feature, programmed based on the voice and persona of one of its employees.

In the world of podcasting, a company called Inception Point AI, founded by a former Wondery executive, has more than 5,000 podcasts and is generating 3,000 episodes a week at a cost of $1 or less per episode.

To underscore his point to iHeart employees, Poleman added some stats, including that “70% of consumers say they use AI as a tool, yet 90% want their media to be from real humans,” and that “92% say nothing can replace human connection — up from 76% in 2016.”

Read the full letter below.

Team,

A few weeks ago, I shared that iHeart is one of the last truly human entertainment sources and our listeners come to us for companionship, connection, and authenticity — something AI can’t replicate. We’re Guaranteed Human. We don’t use AI-generated personalities. We don’t play AI music that features synthetic vocalists pretending to be human. And the podcasts we publish are also Guaranteed Human.

Thank you for leaning into our commitment to keep everything we do real and authentic. That’s what makes us special. And now we’re taking it a step further.

Starting Monday, 11/24, we’re making “Guaranteed Human” a core part of our brand. You’ll hear it in our imaging, and we want listeners to feel it every time they tune in. Here’s how:

Hourly Legal IDs:

We’re changing hourly legal IDs beginning Monday to say:

“(Station call letters/name), (city of license) an iHeartRadio station… Guaranteed Human” (with the iHeartRadio audio signature heartbeat).

These will run every hour on our stations.

Sweepers

To augment our legal IDs, we also want you to create fun, nonchalant sweepers that fit your station’s vibe and reinforce that we’re Guaranteed Human. Sweepers should end with the words “Guaranteed Human.” Drop them in every hour, between songs or talk content where it feels natural. Many stations will also start these on Monday, with others ramping up in the following weeks.

Attached are examples of the hourly legal ID and sweepers.

Remember, this isn’t a tagline — it’s a promise. And it’s part of every station’s personality. When listeners interact with us, they know they’re connecting with real voices, real stories, and real emotion. That’s our superpower.

A little about why this matters so much; research says:

70% of consumers say they use AI as a tool, yet 90% want their media to be from real humans.

92% use social media, but 2/3 say it makes them feel worse and more disconnected.

92% say nothing can replace human connection — up from 76% in 2016.

9 in 10 say human trust can’t be replicated with AI.

To be clear, we do encourage the use of AI powered productivity and distribution tools that help scale our business operations – such as scheduling, audience insights, data analysis, workflow automation, show prep, editing and organization. Those tools help us reach more people efficiently, while preserving the human creativity and authenticity that define our brand.

We talk to our listeners constantly, and they tell us they are also using AI as a tool, but they tell us there’s a limit:

3/4 expect AI will complicate their lives in the next year and beyond.

82% are worried about the impact AI will have on society.

2/3 are worried about losing their jobs to AI.

And, funny enough, 2/3 even fear that AI could someday go to war with humans.

The bottom line is our research tells us that 96% of consumers think “Guaranteed Human” content is appealing. So, we’re leaning in.

Thank you for keeping it real and making “Guaranteed Human” something that our audience hears and feels every day.

Sometimes you have to pick a side — we’re on the side of humans.

Tom

Elsa / About This Account

In news that shouldn’t surprise a soul, it turns out those MAGA troll accounts on X, formerly Twitter, are not even based in the United States.

Before Elon Musk reluctantly purchased Twitter and stupidly changed the name to X, it wasn’t a secret that the social media platform was a hotbed for foreign influence campaigns meddling in American politics.

Russian troll farms, for example, were the focus of numerous credible reports and targeted by the United States government.

After X rolled out a new feature called “About This Account,” it became even clearer that trolls are busier than ever on the platform and are probably making money from the political madness going on between Democrats and MAGA Republicans.

Per The Verge:

Almost immediately after the feature launched, people started noticing that many rage-bait accounts focused on US politics appeared to be based outside of the US. Profiles with names like ULTRAMAGA🇺🇸TRUMP🇺🇸2028 were revealed to be based in Nigeria. A verified account posing as border czar Tom Homan was traced to Eastern Europe. And America_First0? Apparently from Bangladesh. An entire network of “Trump-supporting independent women” claiming to be from America was really located in Thailand.

Social Media Began Sniffing Out Sketchy Accounts

It didn’t take long for users on the platform to start sniffing out other pro-MAGA accounts and exposing them as foreign influencers.

Of course, the right-wing influencers are doing the same by pulling up left-leaning/progressive accounts as foreign agents. The back-and-forth is only adding to the political vitriol currently in this country.

Hilariously, as soon as X rolled out the feature, they promptly pulled it back, noting that locations could be inaccurate due to travel, VPNs, and proxies.

While that could be true, it’s also unlikely to be the case for so many accounts.

Every two weeks, users on the AI music platform Suno create as much music as what is currently available on Spotify, according to Suno investor presentation materials obtained by Billboard. Those users are primarily male, aged 25-34, and spend an average of 20 minutes creating the roughly 7 million songs produced on the platform daily, according to the documents and additional sources.

Suno sees a future where, as it expands its offerings, creators and listeners will not need to leave the app to create, stream or share their music socially. The goal is listed as creating “high-value, high-intent music discovery” and “artist-fan interaction.”

Now, Suno might have the money to fulfill that ambitious vision. Suno announced last week that it had raised $250 million in a Series C fundraise, led by Menlo Ventures, with additional investors including NVIDIA’s venture capital arm NVentures and Hallwood Media. The round brings Suno’s valuation up to $2.45 billion.

“In just two years, we’ve seen millions of people make their ideas a reality through Suno, from first-time creators to top songwriters and producers integrating the tool into their daily workflows,” Suno CEO Mikey Shulman said in a statement about the company’s new influx of cash. “This funding allows us to keep expanding what’s possible, empowering more artists to experiment, collaborate and build on their creativity. We’re proud to be at the forefront of this historic moment for music.”

The investment materials, obtained by Billboard, provide more insight into the company’s aspirations for what Shulman calls the “future of music” which he is “seeing … take shape in real time.” In the materials, Suno claims that by 2028 the company will grow to $1 billion in revenue, and “this is before we consider monetizing consumption.” Eventually, the company says, the Suno of “tomorrow … will power the new, bigger music ecosystem and will be a $500 billion company.” Reps for Suno did not respond to multiple requests for comment.

According to the pitch deck, this funding round will be allocated to the following uses: 30% computing power, 20% mergers and acquisitions, 20% discovery, 20% marketing, 15% data and 5% partnerships. Already, the company has shown an interest in growing and expanding its offerings via acquisition, having purchased digital audio workstation WavTool in June 2025.

Notably, compute power — the hardware, processors, memory, storage and energy that operate data centers — is the company’s biggest expense since Jan. 2024, which is a common situation for many AI companies. “Amid the AI boom, compute power is emerging as one of this decade’s most critical resources … there is an unquenchable need for more,” a McKinsey study said about the cost of compute in the age of AI.

OpenAI’s AI video and social platform, Sora, for example, especially struggles against high compute costs due to the complexities of generating video. According to an estimate by Forbes, Sora costs the company as much as $15 million per day to run. According to Suno’s financials, reviewed by Billboard, Suno has spent over $32 million on training its model since January 2024. That breaks down to $32 million spent on compute power and $2,000 spent on data costs — meaning the cost of content, like music, which it uses to train its model.

Suno is currently in the middle of a number of lawsuits concerning the data on which it trains its model. This includes two class action copyright infringement lawsuits, filed by indie musician groups; one lawsuit from Danish rights group Koda; and one from German collection society GEMA. Most significant, however, is the copyright infringement lawsuit filed by the three major music companies, Sony, Universal and Warner, against Suno for $500 million, claiming widespread copyright infringement of their sound recordings “at an almost unimaginable scale” to train its model.

In recent months, there have been reports that Suno and Udio, which was also sued in a near-identical lawsuit by the majors, have been in licensing and settlement talks with the majors. At least part of that reporting proved to be true: recently, Udio settled with both Universal and Warner and created a license structure for their recorded music and publishing interests. (Sony’s lawsuit against the company is still ongoing.) Now, to work with the music companies, Udio is pivoting its service to become more of a fan engagement platform where users can play with participating songs in a “walled garden,” meaning users cannot download and post the creations on streaming services.

Suno’s pitch deck does not say anything about its lawsuits with copyright holders. It also does not discuss any plan for licensing musical content.

Suno sold investors on its vision to create a service where you can “create, listen and inspire” on the platform, “turn[ing] music from passive consumption into an active participatory culture.” To expand this vision, Suno plans to roll out more products like a voice beautification filter and a social media service. It also touts that its work has cultural power outside of consumption on its own service, including a photo of cover art from viral AI band The Velvet Sundown next to the text, “Suno songs go viral off platform.”

The deck shows an image of a user playing guitar in a TikTok-like vertical social media video. In the top right corner, above typical social media buttons (“like,” “comment” and “share”) is a button that says “Create Hook,” implying that users will connect through iterating and remixing each other’s creations in a video, social-forward way.

The company’s vision for the future, however, hinges on customer acquisition and reducing the number of users who leave the service after joining. According to the deck, Suno says it has 1 million subscribers already, up 300% year over year, and it currently says approximately 25% of subscribers remain after 30 days. On a weekly basis, Suno says it has 78% retention for subscribers and 39% weekly retention for all users.

Suno’s investment materials say that one positive indicator is that the company is “reactivating” an increasing number of users, meaning these users came back after one month. As of July 2025, Suno claims 350,000 reactivated users per week. The company attributes this to things like new features, increased awareness and improvements in its model.

Additional reporting by Elizabeth Dilts Marshall.

SOPA Images / Trump Mobile

We all knew Trump Mobile was the jig when it was first announced, and one tech writer for The Verge learned that likely remains the case.

Allison Johnson, a senior reviewer for The Verge, shared her experience after signing up for Trump Mobile, Donald Trump and his family’s latest grift.

Johnson reports she ordered a Trump Mobile SIM card to test the service, since the website can’t seem to lock down a device for testing.

That doesn’t come as a surprise because we previously reported that Spigen, a mobile accessory company, threatened to sue Trump Mobile after it was caught using photoshopped images of a gold-plated Samsung S25 Ultra with a T logo slapped on the back of a Spigen phone case.

Anyway, Johnson detailed her attempt to secure a SIM card, and that also was a failure because it failed to show up even after the company said it would ship the next business day via First Class USPS mail.

Per The Verge:

Let’s say I don’t fully trust the Trump Organization to be great stewards of my credit card information, so I used a virtual number provided by my bank. Once I’d handed over the virtual money, I got this message: “Thank you for your order of a Physical SIM, we’ll ship next business day via First Class USPS mail, no separate tracking number will be sent.” Just what I was looking for with my wireless service: a sense of mystery! Fast-forward two weeks, and that SIM card is still on its way.

The Extremely Nice Customer Service Kept Her From Feeling Salty

Johnson’s experience with Trump Mobile wasn’t all bad, thanks to the extremely pleasant customer service she received.

A shocker coming from a Trump-family-owned company.

Anyway, two weeks passed, and, no surprise, she didn’t receive her SIM card, so she called customer service, and they agreed she should have received it already.

Later that afternoon, Johnson received an email informing her that her SIM card would be shipped via two-day FedEx.

The shipping company emailed her to confirm she would be receiving her SIM card from Liberty Mobile, the MVNO behind Trump Mobile.

A Trump Mobile customer service representative named “Kh” reached out to her to inform her that she would be receiving a refund and that her package would be “arriving soon.”

She did get her refund the same day, but the wait for the SIM card is still ongoing, and Johnson claims it could arrive any day.

We shall see.

KLAY has signed AI licensing deals with the three major music companies — Universal Music Group (UMG), Sony Music and Warner Music Group (WMG) — on both the recorded music and publishing sides of their businesses.

Little is known about KLAY, which is set to launch “in the coming months,” according to a press release, but one source close to the deal tells Billboard the company will be a subscription-based interactive streaming service where users can manipulate music. In 2024, the company made its first licensing announcement with UMG, but back then, the service was described as a “Large Music Model,” dubbed “KLayMM,” which would “help humans create new music with the help of AI.”

Since its inception, KLAY has stressed its interest in being a partner to the music industry, providing ethical solutions in the AI age, rather than an adversary. As its press release states, “KLAY is not a prompt-based meme generation engine designed to supplant human artists. Rather, it is an entirely new subscription product that will uplift great artists and celebrate their craft. Within KLAY’s system, fans can mold their musical journeys in new ways while ensuring participating artists and songwriters are properly recognized and rewarded.”

The company was founded by Ary Attie (a musician and now CEO), and Thomas Hesse (former president of global digital business and U.S. sales and distribution at Sony Music and now KLAY’s chief content and commercial officer). The company’s top ranks also include Björn Winckler (chief AI officer; former leader of Google DeepMind’s music initiatives), and Brian Whitman (chief technology officer; former principal scientist at Spotify and founder of The Echo Nest).

“Technology is shaped by the people behind it and the people who use it. At KLAY, from the beginning, we set out to earn the trust of the artists and songwriters whose work makes all of this possible,” said Attie in a statement. “We will continue to operate with those values, bringing together a growing community to reimagine how music can be shared, enjoyed, and valued. Our goal is simple: to help people experience more of the music they love, in ways that were never possible before — while helping create new value for artists and songwriters. Music is human at its core. Its future must be too.”

Michael Nash, executive vp and chief digital officer at UMG, said: “We are very pleased to have concluded a commercial license with KLAY Vision, following up on our industry-first strategic collaboration framework agreement announced one year ago. The supportive role we played with the capable and diversified management team of Ary, Thomas, Björn and Brian in the development of their product and business model extends our long-standing commitment to entrepreneurial innovation in the digital music ecosystem. We’re excited about their transformational vision and applaud their commitment to ethicality in Generative AI music, which has been a key foundation of our partnership with them from the very start of their journey.”

Dennis Kooker, president of global digital business at Sony Music, said: “We are pleased to partner with KLAY Vision to collaborate on new generative AI products. While this is a beginning, we want to work with companies that understand that proper licenses are needed from rightsholders to build next-generation AI music experiences.”

Carletta Higginson, executive vp and chief digital officer at WMG, said: “Our goal is always to support and elevate the creativity of our artists and songwriters, while fiercely protecting their rights and works. From day one, KLAY has taken the right approach to the rapidly-evolving AI universe by creating a holistic platform that both expands artistic possibilities and preserves the value of music. We appreciate the KLAY team’s work in advancing this technology and guiding these important agreements.”

Imogen Heap has always been known as an innovator in the music industry. The British singer, songwriter, producer and technologist has been experimenting with cutting-edge tools to push her creativity forward since she first began releasing music over 25 years ago.

Now, as AI music continues to make headlines in the music industry and infiltrates the songwriting process, Heap is working on ethical ways to incorporate it into her own work. Recently, she released the song “I AM___,” a 13-minute epic that features a collaboration between Heap and AI.Mogen, her self-trained AI voice model. By collaborating with her digital self, Heap forces listeners to consider big questions, like the nature of art and self-identity.

She’s also working on a company called Auracles, which recently announced a partnership with SoundCloud designed to create a verified digital ID for musicians that, in the age of AI, helps them track their music’s uses across the internet, grant permissions for approved uses of their work and create “the missing foundational data layer for music.”

To talk through how she’s using AI in a responsible and creative way, as well as the 20th anniversary of her seminal album Speak For Yourself, Heap joined Billboard’s new music industry podcast, On the Record w/ Kristin Robinson, this week.

Below is an excerpt of that conversation.

Watch or listen to the full episode of On the Record on YouTube, Spotify or Apple Podcasts.

You’ve been watching this space for a long time, but when do you feel like you noticed a shift when everyone else started paying attention?

I feel like the silver lining, really, we definitely are at that place where it’s very confusing right now, and we do need some clarity, but we are, we can do it. There are tools, and everybody wants it. So I feel like the silver lining, really, of this dark cloud that’s on my seat as AI music taking over, is that we are going to get the data layer of works, we’re going to get this complete data layer of works because people will want to prove not only that they’re human, but they want to go there. They want to actually say, “No, I’m human and these are all my works that I actually contributed to.”

Hallwood Media is the first music company to be open about signing so-called AI artists like Xania Monet and imoliver. Do you think that major labels will start signing AI talent, or talent that uses AI very heavily, in the next few years?

I think a lot of things when you say that. I feel a lot of major labels are signing music that sounds AI generated to me anyway, it’s just like, ‘oh, that just sounds like that last thing and that last thing’ — nothing’s changed. So, it wouldn’t surprise me. I thought that that would happen, and wherever there’s money, obviously they’ll go if they think they can make money out of this artist.

You recently released a 13-minute song called “I AM__” which featured you singing alongside AI.Mogen, which is essentially your own AI voice model. Why did you decide to collaborate with yourself, and what were you trying to say artistically?

It’s kind of a very long-winded, kind of silly way to do things, but I did it as a statement. I did it to rile people up, I suppose, and just be able to have this conversation. Because the song, in the beginning, it takes you through this journey of what I’ve experienced over the last four years where I started to think about, ‘Who am I? What am I? And this ego, what is it?’

And then I started to think about AI. Because what is AI? What can I feel that AI can’t feel? The noise section in that song is like the annihilation of my ego (I’m not saying I have no ego now). But then, after, I needed a section for aftercare. I wanted to explore this idea that AI is our child. AI is something that we are raising together, as the mother and father. Right at the end, there’s the voice of AI.mogen. I wanted it to be an AI voice, even though I had to sing everything. The way I made this is I sang all the parts, and then I put it through my AI model so that the model of my voice is then singing the words. It’s like changing the sound on a keyboard.

Yeah, so like a voice filter over your own performance, but this filter is also you in a way?

Yeah, and I wanted to trick people. After this quite traumatic noise section, they would feel something, and that voice would not be my voice; it would be the AI agent’s voice. I wanted to create a discussion. I wanted to, you know, show people that we already don’t know the difference between AI and human, but does it matter? I do say in the title, it’s AI.mogen, but it was all ethically sourced. It was all done in the best way possible. And it’s my own voice, and I didn’t use any, I didn’t generate any music.

Of course, some people already canceled me for, you know, even saying that AI is in my music. So many people have said: ‘You use AI to generate the song?’ I was like, ‘No, I did not. I wish I could, because it took me four years to do it with hundreds of hours,’ but the point of this is everybody is fearful of it, but we can still feel. And what is art? Art is something for someone, not for somebody else. If it speaks to you and it makes you feel something, does it matter? If it makes you recognize something in yourself? I mean, essentially, AI is generated from humans.

I love that you’ve been able to make this model that is totally within your control. I think the thing that gets scary is when anyone can create another person’s voice model online. I think such an important part of being an artist is having the taste and the curation to know what you want to say and don’t say. In an age of AI, it feels like you’re losing a lot of control over yourself. Do you have fears around that?

I mean, we already have lost control. People basically, you know, say that we’ve written something when we haven’t, and they don’t credit us when we have been a part of it. But that’s still very much less. I do think it’s gonna, really, it’s just gonna, it’s gonna force us into creating something that will make sense of what we have already and for the future, so that we can put a flag in the sand as humans and go, okay, up to this point, it was human generated. Again, I think it just comes down to this core missing piece that we don’t have which is an ID layer, like, identifying home for each individual.

AI almost completely lowers the barrier to entry for making music. As a trained musician, I’m wondering what your thoughts are on that?

Why not? Anyone who has spent 10,000 hours perfecting their craft will always have an edge. If you generate anything off these services right now, you’re just going to sound like 99.99% of other people who did that too, but if you have an edge, if you have a real something there that connects with people, you use these models differently. But, yeah, this helps everyone move forward. I don’t have a problem with that at all.
