AI

Last month, Vice President J.D. Vance represented the U.S. at the Artificial Intelligence Action Summit in Paris. In a speech addressing top leaders from around the world, he declared, “I think our response [to AI] is to be too self-conscious, too risk-averse, but never have I encountered a breakthrough in tech that so clearly calls us to do precisely the opposite. […] We believe excessive regulation of the AI sector could kill a transformative industry just as it’s taking off.”

Vance’s comments marked a stark shift from the Biden administration, which often spoke about weighing AI’s “profound possibilities” against its “risks,” as the former president put it in his farewell address in January. In the wake of Vance’s remarks in Paris, it’s clear that in the Trump White House, AI safety is out and the race for dominance is in. What does that mean for the music business and its quest to protect copyrights and publicity rights in the AI age?

“All the focus is on the competition with China, so national security has become the number one issue with AI in the Trump administration,” says Mitch Glazier, CEO/president of the Recording Industry Association of America. “But for our industry, it’s interesting. The [Trump administration] does seem to be saying at the same time that we also need to be ‘America First’ with our [intellectual property] too. It’s both ‘America First’ for IP and ‘America First’ for AI.”

That, Glazier thinks, provides an opportunity for the music business to continue to push its AI agenda in D.C. While the president does not have the remit to make alterations to copyright protection in the U.S., the Trump administration still has powerful sway with the Republican-dominated legislative branch, where the RIAA, the Recording Academy and others have been fighting to get new protections for music on the books. Glazier says there’s been no change in strategy there — it’s still full steam ahead, trying to get those bills passed into law in 2025.

Top copyright attorney Jacqueline Charlesworth, partner at Frankfurt Kurnit Klein & Selz, still fears that Vance’s speech — as well as President Trump’s inauguration in January, where he was flanked by top executives from Apple, Meta, Amazon and Alphabet — “reflected a lot of influence from the large tech platforms.” Many major tech companies have taken the position that training their AI models on copyrights does not require consent, credit or compensation. “My concern is that creators and copyright owners will be casualties in the AI race,” she says.

For David Israelite, president/CEO of the National Music Publishers’ Association, it’s still too early to totally understand the new administration’s views on copyright and AI. But, he says, “we are concerned when the language is about rushing to train these models — and that becoming a more important principle than how they are trained.”

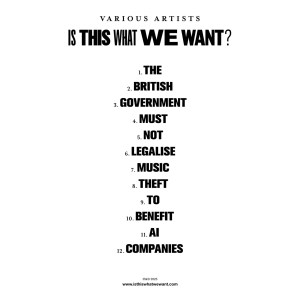

Glazier holds out hope that Trump’s bullish approach to trade agreements with other nations could benefit American copyright owners and may influence trade partners to honor U.S. copyrights. Specifically, he points to the U.K., where the government has recently proposed granting AI companies unrestricted access to copyrighted material for training their models unless the rights holder manually opts out. The opt-out proposal is widely despised by copyright holders of all kinds, and the music industry has protested it in recent weeks through op-eds in national newspapers, comments to the U.K. government and a silent album, Is This What We Want?, co-authored by a thousand U.K. artists, including Kate Bush, Damon Albarn and Hans Zimmer.

Organized by AI developer, musician and founder of AI safety non-profit Fairly Trained, Ed Newton-Rex, Is This What We Want? features silent tracks recorded in famous studios around London to demonstrate the potential consequences of not protecting copyrighted songs. “The artists and the industry in the U.K. have done an incredible job,” says Glazier. “If for some reason the U.K. does impose this opt-out, which we think is totally unworkable, then this administration may have an opportunity to apply pressure because of a renewal of trade negotiations.”

Israelite agrees. “Much of the intellectual property fueling these AI models is American,” he says. “The U.S. tackles copyright issues all the time in trade agreements, so we are always looking into that angle of it.”

It’s not just American music industry trade groups that have been following the Trump administration’s approach to AI. Abbas Lightwalla, director of global legal policy for the International Federation of the Phonographic Industry (IFPI), the global organization representing the interests of the recorded music business, says he and his colleagues followed Vance’s Paris speech “with great interest,” and that future trade agreements between the U.S. and other nations are “absolutely on the radar,” given that IFPI advocates across the world for the music industry’s interests in trade negotiations. “It’s crucial to us that copyright is protected in every market,” he says. “It’s a cross-border issue… If the U.S. is doing the same, then I think that’s a benefit to every culture everywhere to be honest.”

Charlesworth says this struggle is nothing new; the music industry has dealt with challenges to copyright protection for decades. “In reflecting on this, I feel like, starting in the ‘90s and 2000s, the tech business had this ‘take now, pay later’ mentality to copyright. Now, it feels like it’s turned into ‘take now, and see if you can get away with it.’ It’s not even pay later.”

As the AI race continues to pick up at a rapid pace, Israelite says he’s “not that hopeful that we are going to see any kind of government action quickly that would give us guidance” — so he’s also watching the active lawsuits surrounding AI training and copyright closely and looking to the commercial space for businesses in AI and IP that are voluntarily working out solutions together. “We’re very involved and focused on partnerships with AI that can help pave the way for how this technology provides new revenue opportunities for music, not just threats,” he says.

Glazier says he’s working in the commercial marketplace, too. “We have 60 licensing agreements in place right now between AI companies and music companies,” he says. Meanwhile, the RIAA is still watching the two lawsuits it spearheaded for the three major music companies against AI music startups Suno and Udio and is working to get bills like the NO FAKES Act and NO AI FRAUD Act passed into law.

“While IP wasn’t on the radar in Vance’s speech, the aftermath of it totally shifted the conversation,” says Glazier. “We just have to keep working to protect copyrights.”

Celine Dion is speaking out against artificial intelligence-generated music that is using her likeness.

The legendary vocalist took to Instagram on Friday (March 7) to share a statement, writing, “It has come to our attention that unsanctioned, AI-generated music purporting to contain Celine Dion’s musical performances, and name and likeness, is currently circulating online and across various Digital Service Providers.”

The statement continued, “Please be advised that these recordings are fake and not approved, and are not songs from her official discography.”

However, Dion did not indicate exactly which AI-generated songs or performances have been circulating. See her post here.

Dion made her long-awaited return to the stage last year, performing Édith Piaf’s 1950 classic “Hymne à L’Amour” on the Eiffel Tower at the 2024 Paris Olympics opening ceremony in July. The event came after she cancelled the dates on her North American Courage world tour before revealing she is fighting Stiff Person Syndrome, a rare neurological disorder that causes severe muscle spasms. Accompanied by a piano and rocking a stunning white gown, the singer delivered her effortlessly flawless vocals as she belted the lyrics to the song, which translates to “The Hymn of Love.”

“I haven’t fought the illness; it’s still within me and will be forever,” she told Vogue France early last year, noting that she follows athletic, physical and vocal therapy five times a week. “Hopefully, we’ll find a miracle, a way to heal through scientific research, but I have to learn to live with it. I work on everything, from my toes to my knees, calves, fingers, singing, voice… It’s the condition I have to learn to live with now, by stopping questioning myself.”

She concluded, “There is one thing that will never stop, and that’s the desire. It’s the passion. It’s the dream. It’s the determination.”

Vermillio, an AI licensing and protection platform, raised $16 million in Series A funding led by Sony Music and DNS Capital, the company announced on Monday (March 3). This marks the first time Sony Music has invested in an AI music company.

Sony Music’s relationship with Vermillio dates back to 2023, when the two companies collaborated on a project for The Orb and David Gilmour. Through the partnership, fans could use Vermillio’s proprietary AI tech to create personalized remixes of the acts’ 2010 ambient album Metallic Spheres.

According to a press release, Vermillio plans to use the funds to scale operations and “continue building out solutions for a generative AI internet that enables talent, studios, record labels, and more to protect and monetize their content.”

Vermillio’s goal is to create an AI platform that securely licenses intellectual property (IP). One of its core products is TraceID, which provides protection and third-party attribution for artists. Through it, the company claims artists and rights holders can control their data and AI rights.

Apart from its collaboration with Sony Music for the AI remix project, Vermillio has also worked with top talent agency WME to shield its clients from IP theft and find opportunities to monetize their name, image and likeness rights by licensing their data. Sony Pictures also worked with Vermillio to create an AI engine that allowed fans to make their own unique digital avatars in the style of Spider-Verse animation. Each of the fan generations was then tracked using TraceID so that all works could be tied back to the filmmakers’ original IP.

“We are setting a new standard for AI licensing — one that proactively enables consent, credit, and compensation for innovative opportunities,” said Dan Neely, co-founder/CEO of Vermillio, in a statement. “With the support of an innovation leader like Sony Music, Vermillio will continue building our products that ensure generative AI is utilized ethically and securely. At this critical moment in determining the future of AI and how to hold platforms accountable, we are proud to protect the world’s most beloved content and talent.”

“Sony Music is focused on developing responsible generative AI use cases that enhance the creativity and goals of our talent, protect their work, excite fans, and create new commercial possibilities,” added Dennis Kooker, president of global digital business at Sony Music Entertainment. “Dan Neely and the team at Vermillio share our vision that prioritizing proper consent, clear attribution and appropriate compensation for professional creators is foundational to unlocking monetization opportunities in this space. We look forward to expanding our successful collaboration with them as we work to support the growth of trusted platforms by enabling secure AI solutions that are mutually beneficial for technology innovators, artists and rightsholders.”

Amazon has partnered with AI music company Suno for a new integration with its voice assistant Alexa, allowing users to generate AI songs on command using voice prompts. This is part of a much larger rollout of new features for a “next generation” Alexa, dubbed Alexa+, powered by AI technology.

“Using Alexa’s integration with Suno, you can turn simple, creative requests into complete songs, including vocals, lyrics, and instrumentation. Looking to delight your partner with a personalized song for their birthday based on their love of cats, or surprise your kid by creating a rap using their favorite cartoon characters? Alexa+ has you covered,” says an Amazon blog post, posted Wednesday (Feb. 26).

Other new Alexa+ features include new voice filters, image generation, smart home operation, Uber booking and more. It also includes an integration with Ticketmaster to “find you the best tickets to an upcoming basketball game or to the concert you’ve been dying to go to,” according to the blog post.

Suno is known to be one of the most powerful AI music models on the market, able to generate realistic lyrics, vocals and instrumentals at the click of a button. However, the company has come under scrutiny by the music business establishment for its training practices. In an effort spearheaded by the RIAA, Universal Music Group, Sony Music and Warner Music Group came together last summer to sue Suno and its rival Udio, accusing the AI music companies of copyright infringement “on an almost unimaginable scale.” At the time, neither AI company had admitted to training on copyrighted material.

In a later filing, Suno admitted that “it is no secret that the tens of millions of recordings that Suno’s model was trained on presumably included recordings whose rights are owned by the Plaintiffs in this case.” Its CEO, Mikey Shulman, added in a blog post that same day, “We see this as early but promising progress. Major record labels see this vision as a threat to their business. Each and every time there’s been innovation in music… the record labels have attempted to limit progress,” adding that Suno felt the lawsuit was “fundamentally flawed” and that “learning is not infringing.”

More recently, German collection society GEMA also took legal action against Suno in a case filed Jan. 21 in Munich Regional Court.

Still, a couple of music makers have sided with Suno. In October, Timbaland was announced as a strategic advisor for the AI music company, assisting in “creative direction” and “day-to-day product development.” Electronic artist and entrepreneur 3LAU has also been named as an advisor to the company.

News of Amazon’s deal with Suno comes just months after its streaming service, Amazon Music, was commended by the National Music Publishers’ Association for finding a way to add audiobooks to its “Unlimited” subscription tier in the U.S. without “decreas[ing] revenue for songwriters” — a contrast to Spotify, which decreased payments to U.S. publishers by about 40% when it added audiobooks to its premium tier.

Kate Bush, Damon Albarn, Annie Lennox and Hans Zimmer are among the artists who have contributed to a new “silent” album to protest the U.K. government’s stance on artificial intelligence (AI).

The record, titled Is This What We Want?, is “co-written” by more than 1,000 musicians and features recordings of empty studios and performance spaces. In an accompanying statement, the use of silence is said to represent “the impact on artists’ and music professionals’ livelihoods that is expected if the government does not change course.”

The record was organized by Ed Newton-Rex, the founder of Fairly Trained, a non-profit that certifies generative AI companies that respect creators’ rights. The tracklisting to the 12-track LP reads: “The British government must not legalise music theft to benefit AI companies.”

Is This What We Want? is now available on all major streaming platforms.

Also credited as co-writers are performers and songwriters from across the industry, including Billy Ocean, Ed O’Brien, Dan Smith (Bastille), The Clash, Mystery Jets, Jamiroquai, Imogen Heap, Yusuf / Cat Stevens, Riz Ahmed, Tori Amos, James MacMillan and Max Richter. The full list of musicians involved with the record can be viewed at the LP’s official website. All proceeds from the album will be donated to the charity Help Musicians.

The release comes at the close of the British government’s 10-week consultation on how copyrighted content, including music, can lawfully be used by developers to train generative AI models. Initially, the government proposed a data mining exception to copyright law, meaning that AI developers could use copyrighted songs for AI training in instances where artists have not “opted out” of their work being included.

The government report said the “opt out” approach gives rightsholders a greater ability to control and license the use of their content, but it has proved controversial with creators and copyright holders. In March 2024, the 27-nation European Union passed the Artificial Intelligence Act, which requires transparency and accountability from AI developers about training methods and is viewed as more creator-friendly.

Speaking at the beginning of the consultation, Lisa Nandy, the U.K.’s Secretary of State for Culture, Media and Sport, said in a statement: “This government firmly believes that our musicians, writers, artists and other creatives should have the ability to know and control how their content is used by AI firms and be able to seek licensing deals and fair payment. Achieving this, and ensuring legal certainty, will help our creative and AI sectors grow and innovate together in partnership.”

Industry body UK Music said in its most recent report that the U.K. music scene contributed £7.6 billion ($9.6 billion) to the country’s economy, while exports reached £4.6 billion ($5.8 billion).

“The government’s proposal would hand the life’s work of the country’s musicians to AI companies, for free, letting those companies exploit musicians’ work to outcompete them,” said Newton-Rex in a statement on the album release. “It is a plan that would not only be disastrous for musicians, but that is totally unnecessary: the UK can be leaders in AI without throwing our world-leading creative industries under the bus. This album shows that, however the government tries to justify it, musicians themselves are united in their thorough condemnation of this ill-thought-through plan.”

Jo Twist, CEO of the British Phonographic Industry (BPI), added, “The UK’s gold-standard copyright framework is central to the global success of our creative industries. We understand AI’s potential to drive change including greater productivity or improvements to public services, but it is entirely possible to realise this without destroying our status as a creative superpower.”

Speaking to Billboard U.K. in January, alt-pop star Imogen Heap — a co-writer on Is This What We Want? — expanded on her approach to AI. “The thing which makes me nervous is the provenance; there’s all this amazing video, art and poetry being generated by AI as well as music, but you know, creators need to be credited and they need to tell us where they’re training [the data] from.”

MashApp, a music remixing app featuring hit songs from Doja Cat, Ed Sheeran, Britney Spears and more, launched in Apple’s App Store Tuesday (Feb. 18). At launch, the AI-powered app has already worked out licenses for select tracks from Universal Music Group (UMG) and Warner Music Group’s (WMG’s) publishing and recorded music catalogs, Sony Music’s recorded music catalog, and Kobalt’s publishing catalog.

The app, founded by former Spotify executive Ian Henderson, features a TikTok-like vertical feed for users to share the remixes they make with the app. Among the tools it offers to users, MashApp boasts the ability to combine and mix multiple songs into each other and to speed up, slow down or separate out a song into its individual stems (the individual instrument tracks in a master recording).

News of MashApp’s launch arrives just days after Bloomberg reported that Spotify is planning to launch a superfan streaming tier that includes extra features, like high-fidelity audio and in-app remixing tools.

MashApp, however, is the latest standalone app to use cutting-edge technology, like AI, to allow users to morph and manipulate their favorite songs. Last year, Hook, another AI remix app, announced its licensing deal with Downtown. Other companies, like Lifescore, Reactional Music and Minibeats, have also played with the idea of allowing users more control over the music they listen to in recent years. With these tools, fans can turn static recordings into dynamic works that evolve over time based on a listener’s situation, whether that’s having the music respond to actions in a video game in real time or while driving a car. Even Ye (formerly Kanye West) played with this concept during the rollout of his album Donda 2, which was only available via a hardware device, called a Stem Player, that let listeners control the mix of the album.

MashApp is available for free or via a paid subscription for ad-free listening and additional features. Though mashups and remixes of hit songs are popular soundtracks for short-form content on TikTok and Instagram Reels, MashApp creations must be enjoyed within the app and are only available for personal use.

“MashApp’s mission is to bring the joy of playing with music creation to non-musicians, to let people play with their favorite music, as they have long done through DJing, mix tapes, mashups, and karaoke,” explained MashApp CEO/founder Henderson in a statement. “We want this new creative play to be a great experience for fans, but also for artists. This requires close partnerships with record labels and music publishers, and we’re excited that our partners have embraced our vision.”

Mark Piibe, executive vp of global business development & digital strategy at Sony Music, added: “We are pleased to be working with MashApp to help fans go deeper in how they engage with their favorite music through a new personalization and creation experience that appropriately values the work of our artists. This partnership furthers Sony Music’s ongoing commitment to supporting innovation in the marketplace by collaborating with developers of quality products that see opportunity in solutions that respect the rights of professional creators.”

“UMG always seeks to support innovation in the digital music ecosystem. MashApp introduces another evolution of the streaming experience for users by combining the creativity of DJ apps, with the accessibility that streaming offers,” said Nadir Contractor, senior vp of digital strategy & business development at UMG. “Within MashApp, users can unlock their own creative expression to curate, play and enjoy in real-time musical mashups from their favorite artists and songs, while respecting and supporting artist rights.”

“Our commitment to championing the rights of our artists and songwriters is at the core of everything we do,” said John Rees, senior vp of strategy & business development at WMG. “This partnership with MashApp builds on this mission–delivering a licensed, innovative platform that not only offers fans an exciting way to engage with music but also safeguards the work of the artists and songwriters who make it all possible.”

Lastly, Bob Bruderman, chief digital officer at Kobalt Music, added, “Kobalt has been a strong supporter of new companies that allow fans to express their creativity and engage with music they love. It was immediately clear that MashApp had a unique vision that opened a new experience for music fans on a well-executed platform, simultaneously respecting copyright. We look forward to a long partnership with MashApp.”

This analysis is part of Billboard’s music technology newsletter Machine Learnings. Sign up for Machine Learnings, and other Billboard newsletters for free here.

Have you heard about our lord and savior, Shrimp Jesus?

Last year, a photo of Jesus made out of shrimp went viral on Facebook, and while it might seem obvious to you and me that generative AI was behind this bizarre combination, plenty of boomers still thought it was real.

Bizarre AI images like these have become part of an exponentially growing problem on social media sites, where they are rarely labeled as AI and are so eye grabbing that they draw the attention of users, and the algorithm along with them. That means less time and space for the posts from friends, family and human creators that you want to see on your feed. Of course, AI makes some valuable creations, too, but let’s be honest, how many images of crustacean-encrusted Jesus are really necessary?

This has led to a term called the “Dead Internet Theory,” the idea that AI-generated material will eventually flood the internet so thoroughly that nothing human can be found. And guess what? The same so-called “AI Slop” phenomenon is growing fast in the music business, too, as quickly generated AI songs flood DSPs. (Dead Streamer Theory? Ha. Ha.) According to CISAC and PMP, this could put 24% of music creators’ revenues at risk by 2028, so it seems like the right time for streaming services to create policies around AI material. But exactly how they should take action remains unclear.

In January, French streaming service Deezer took its first step toward a solution by launching an AI detection tool that will flag whatever it deems fully AI generated, tag it as such and remove it from algorithmic recommendations. Surprisingly, the company claims the tool found that about 10% of the tracks uploaded to its service every day are fully AI generated.

I thought Deezer’s announcement sounded like a great solution: AI music can remain for those who want to listen to it, can still earn royalties, but won’t be pushed in users’ faces, giving human-made content a little head start. I wondered why other companies hadn’t also followed suit. After speaking to multiple AI experts, however, it seems many of today’s AI detection tools generally still leave something to be desired. “There’s a lot of false positives,” one AI expert, who has tested out a variety of detectors on the market, says.

The fear for some streamers is that a bad AI detection tool could open up the possibility of human-made songs getting accidentally caught up in a whirlwind of AI issues, and become a huge headache for the staff who would have to review the inevitable complaints from users. And really, when you get down to it, how can the naked ear definitively tell the difference between human-generated and AI-generated music?

This is not to say that Deezer’s proprietary AI music detector isn’t great (it sounds like a step in the right direction), but the newness of this AI detection technology, and the skepticism that surrounds it, are clearly reasons why other streaming services have been reluctant to try it themselves.

Still, protecting against the negative use cases of AI music, like spamming, streaming fraud and deepfaking, is a focus for many streaming services today, even though almost all of the policies in place to date are not specific to AI.

It’s also too soon to tell what the appetite is for AI music. As long as the song is good, will it really matter where it came from? It’s possible this is a moment that we’ll look back on with a laugh. Maybe future generations won’t discriminate between fully AI, partially AI or fully human works. A good song is a good song.

But we aren’t there yet. The US Copyright Office just issued a new directive affirming that fully AI generated works are ineligible for copyright protection. For streaming services, this technically means that, as with all other public domain works, they don’t need to pay royalties on such tracks. But so far, most platforms have continued to just pay out on anything that’s up on the site, copyright protected or not.

Except for SoundCloud, a platform that’s always marched to the beat of its own drum. It has a policy which “prohibit[s] the monetization of songs and content that are exclusively generated through AI, encouraging creators to use AI as a tool rather than a replacement of human creation,” a company spokesperson says.

In general, most streaming services do not have AI-specific policies, but Spotify, YouTube Music and others have implemented procedures for users to report impersonations of likenesses and voices, a major risk posed by (but not unique to) AI. This closely resembles the method for requesting a takedown on the grounds of copyright infringement, but it has limits.

Takedowns for copyright infringement are required by law, but some streamers voluntarily offer rights holders takedowns for the impersonation of one’s voice or likeness. To date, there is still no federal protection for these so-called “publicity rights,” so platforms are largely doing these takedowns as a show of goodwill.

YouTube Music has focused more than perhaps any other streaming service on curbing deepfake impersonations. According to a company blog post, YouTube has developed “new synthetic-singing identification technology within Content ID that will allow partners to automatically detect and manage AI-generated content on YouTube that simulates their singing voices,” adding another layer of defense for rights holders who are already kept busy policing their own copyrights across the internet.

Another concern with the proliferation of AI music on streaming services is that it can enable streaming fraud. In September, federal prosecutors indicted a North Carolina musician for allegedly using AI to create “hundreds of thousands” of songs and then using the AI tracks to earn more than $10 million in fraudulent streaming royalties. By spreading out fake streams over a large number of tracks, quickly made by AI, fraudsters can more easily evade detection.

Spotify is working on that. Whether the songs are AI or human-made, the streamer now has gates to prevent spamming the platform with massive amounts of uploads. It’s not AI-specific, but it’s a policy that impacts the bad actors who use AI for this purpose.

SoundCloud also has a solution: The service believes its fan-powered royalties system also reduces fraud. “Fan-powered royalties tie royalties directly to the contributions made by real listeners,” a company blog post reads. “Fan-powered royalties are attributable only to listeners’ subscription revenue and ads consumed, then distributed among only the artists listeners streamed that month. No pooled royalties means bots have little influence, which leads to more money being paid out on legitimate fan activity.” Again, not AI-specific, but it will have an impact on AI uploaders with bad motives.

So, what’s next? Continuing to develop better AI detection and attribution tools, anticipating future issues with AI — like AI agents employed for streaming fraud operations — and fighting for better publicity rights protections. It’s a thorny situation, and we haven’t even gotten into the philosophical debate of defining the line between fully AI generated and partially AI generated songs. But one thing is certain — this will continue to pose challenges to the streaming status quo for years to come.

In case you missed it: Suno has picked up another lawsuit against it.

Before you read any further, go to this link and listen to one or two of the songs to which GEMA licenses rights and compare them to the songs created by the generative music AI software Suno. (You may not know the songs, but you’ll get the idea either way.) They are among the works over which GEMA, the German PRO, is suing Suno. And while those examples are selected to make a point, based on significant testing of AI prompts, the similarities are remarkable.

Suno has never said whether it trained its AI software on copyrighted works, but the obvious similarities seem to suggest that it did. (Suno did not respond to a request for comment.) What are the odds that artificial intelligence would independently come up with “Mambo No. 5,” as opposed to No. 4 or No. 6, plus refer to little bits of “Monica in my life” and “Erica by my side?”

“We were surprised how obvious it was,” GEMA CEO Tobias Holzmüller tells Billboard, referring to the music Suno generated. “So we’re using the output as evidence that the original works were elements of the training data set.” That’s only part of the case: GEMA is also suing over the similarities between the AI-created songs and the originals. (While songs created entirely by AI cannot be copyrighted, they can infringe on existing works.) “If a person would claim to have written these [songs that Suno output], he would immediately be sued, and that’s what’s happening here.”

Although the RIAA is also suing Suno, as well as Udio, this is the biggest case that involves compositions, as opposed to recordings — and it could set a precedent for the European Union. (U.S. PROs would not have the same standing to sue, since they hold different rights.) It will proceed differently from the RIAA case, which involves higher damages, and of course different laws, so Holzmüller explained the case to Billboard — as well as how it could unfold and what’s at stake. “We just want our members to be compensated,” Holzmüller says, “and we want to make sure that what comes out of the model is not blatantly plagiarizing works they have written.”

When did you start thinking about bringing a case like this?

We got the idea the moment that services like Suno and Udio hit the market and we saw how easy it was to generate music and how similar some of it sounds. Then it took us about six months to prepare the case and gather the evidence.

Your legal complaint is not yet public, so can you explain what you are suing over?

The case is based on two kinds of copyright infringement. Obviously, one is the training of the AI model on the material that our members write and the processing operations when generating output. There are a ton of legal questions about that, but I think we will be able to demonstrate without any reasonable doubt that if the output songs are so similar [to original songs] it’s unlikely that the model has not been trained on them. The other side is the output. Those songs are so close to preexisting songs, that it would constitute copyright infringement.

What’s the most important legal issue on the input side?

The text and data-mining exception in the Directive [on Copyright in the Digital Single Market, from 2019]. There is some controversy over whether this exception was intended to allow the training of AI models. Assuming that it was, it allows rights holders to opt out, and we opted out our entire membership. There could also be time and territoriality issues [in terms of where and when the original works were copied].

How does this work in terms of rights and jurisdiction?

On the basis of our membership agreement, we hold rights for reproduction and communication to the public, and in particular for use for AI purposes. As far as jurisdiction, if the infringement takes place in a given territory, you can sue there — you just have to serve the complaint in the country where the infringing company is domiciled. As a U.S. company, if you’re violating copyright in the EU, you are subject to EU jurisdiction.

In the U.S., these cases can come with statutory damages, which can run to $150,000 per work infringed in cases of willful infringement. Is there an amount you’re asking for in this complaint?

We want to stake out the principle and stop this type of infringement. There could be statutory damages, but the level has to be calculated, and there are different standards to do that, at a later stage [in the case].

Our long-term goal is to establish a system where AI companies that train their models on our members’ works seek a license from us and our members can participate in the revenues that they create. We published a licensing model earlier this year and we have had conversations with other services in the market that we want to license, but as long as there are unlicensed services, it’s hard for them to compete. This is about creating a level playing field.

How have other rightsholders reacted to this case?

Nothing but support, and a lot of questions about how we did it. Especially in the indie community, there’s a sense that we can only discuss sustainable licenses if we stand up against unauthorized use.

The AI-created works you posted online as examples are extremely similar to well-known songs to which you hold rights. But I assume those didn’t come up automatically. How much did you have to experiment with different prompts to get those results?

We tried different songs, and we tried the same songs a few times and it turned out that for some songs it was a similar outcome every time and for other songs the difference in output was much greater.

These results are much more similar to the original works than what the RIAA found for its lawsuits against Suno and Udio, and I assume the lawyers on those cases worked very hard. Do you think the algorithms work differently in Germany or for German compositions?

I don’t know. We were surprised ourselves. Only a person who can explain how the model works would be able to answer that.

Tell me a bit about the model license you mentioned.

We think a sustainable license has two pillars. Rightsholders should be compensated for the use of their works in training and building a model. And when an AI creates output that competes with input [original works], a license needs to ensure that original rightsholders receive a fair share of whatever value is generated.

But how would you go about attributing the revenue from AI-created works to creators? It’s hard to tell how much an AI relies on any given work when it creates a new one.

Attribution is one of the big questions. My personal view is that we may never be able to attribute the output to specific works that have been input, so distribution can only be done by proxy or by funding ways to allow the next generation of songwriters to develop in those genres. And we think PROs should be part of the picture when we talk about licensing solutions.

What’s the next step in this case procedurally?

It will take some time until the complaint is served [to Suno in the U.S.], and then the defendant will appoint an attorney in Munich, the parties will exchange briefs, and there will be an oral hearing late this year or early next year. Potentially, once there is a decision in the regional court, it could go [to the higher court, roughly equivalent to a U.S. appellate court]. It could even go to the highest civil court or, if matters of European rights are concerned, even to the European Court of Justice [in Luxembourg].

That sounds like it’s going to take a while. Are you concerned that the legal process moves so much slower than technology?

I wish we had a quicker process to clarify these legal issues, but that shouldn’t stop us. It would be very unfortunate if this race for AI would trigger a race to the bottom in terms of protection of content for training.

A new federal report on artificial intelligence says that merely prompting a computer to write a song isn’t enough to secure a copyright on the resulting track — but that using AI as a “brainstorming tool” or to assist in a recording studio would be fair game.

In a long-awaited report issued Wednesday (Jan. 29), the U.S. Copyright Office reiterated the agency’s basic stance on legal protections for AI-generated works: That only human authors are eligible for copyrights, but that material created with the assistance of AI can qualify on a case-by-case basis.

Amid the surging growth of AI technology over the past two years, the question of copyright coverage for outputs has loomed large for the nascent industry, since works that aren’t protected by copyrights would be far harder for their creators to monetize.

“Where that [human] creativity is expressed through the use of AI systems, it continues to enjoy protection,” said Shira Perlmutter, Register of Copyrights, in the report. “Extending protection to material whose expressive elements are determined by a machine, however, would undermine rather than further the constitutional goals of copyright.”

Simply using a written prompt to order an AI model to spit out an entire song or other work would fail that test, the Copyright Office said. The report directly quoted from a comment submitted by Universal Music Group, which likened that scenario to “someone who tells a musician friend to ‘write me a pretty love song in a major key’ and then falsely claims co-ownership.”

“Prompts alone do not provide sufficient human control to make users of an AI system the authors of the output,” the agency wrote. “Prompts essentially function as instructions that convey unprotectible ideas.”

But the agency also made clear that using AI to help create new works would not automatically void copyright protection — and that when AI “functions as an assistive tool” that helps a person express themselves, the final output would “in many circumstances” still be protected.

“There is an important distinction between using AI as a tool to assist in the creation of works and using AI as a stand-in for human creativity,” the Office wrote.

To make that point, the report cited specific examples that would likely be fair game, including Hollywood studios using AI-powered tech to “de-age” actors in movies. The report also said AI could be used as a “brainstorming tool,” quoting from a Recording Academy submission that said artists are currently using AI to “assist them in creating new music.”

“In these cases, the user appears to be prompting a generative AI system and referencing, but not incorporating, the output in the development of her own work of authorship,” the agency wrote. “Using AI in this way should not affect the copyrightability of the resulting human-authored work.”

Wednesday’s report, like previous statements from the Copyright Office on AI, offered broad guidance but avoided hard-and-fast rules. Songs and other works that use AI will require “case-by-case determinations,” the agency said, as to whether they “reflect sufficient human contribution” to merit copyright protection. The exact legal framework for deciding such cases was not laid out in the report.

The new study on copyrightability is the second of three studies the agency is conducting on AI. The first report, issued last year, recommended federal legislation banning the use of AI to create fake replicas of real people; bills that would do so are pending before Congress.

The final report, set for release at some point in the future, deals with the biggest AI legal question of all: whether AI companies break the law when they “train” their models on vast quantities of copyrighted works. That question — which could implicate trillions of dollars in damages and exert a profound effect on future AI development — is already the subject of widespread litigation.

Deezer, a leading French streaming service, says that roughly 10,000 fully AI-generated tracks are being delivered to the platform every day, equating to about 10% of its daily music delivery.

This finding emerged from an AI detection tool Deezer developed and filed two patent applications for in December, the company says. Now, the service is developing a tagging system for the fully AI-generated works it detects in order to remove them from its algorithmic and editorial recommendations and boost human-made music.

According to a press release, Deezer’s new tool can detect AI-made music from several popular AI music models, including Suno and Udio, with plans to further expand its capabilities. The company notes that the tool could eventually be trained to detect from “practically any other similar [AI model]” as long as Deezer can gain access to samples from those models — though the company also says it has “made significant progress in creating a system with increased generalizability, to detect AI generated content without a specific dataset to train on.” It has additional plans to develop the capability to identify deepfaked voices.

Deezer is the first music streaming platform to announce the launch of an AI detection tool and the first to seek a concrete solution for the growing pool of AI music accumulating on streaming platforms worldwide. Given that AI-generated music can be made much more quickly than human-created works, critics have expressed concern that these AI tracks will increasingly take away money and editorial opportunities from human artists on streaming services.

Among those critics are the three major music companies — Universal Music Group, Sony Music Entertainment and Warner Music Group — which collectively sued top AI music models Suno and Udio for copyright infringement last summer. In their complaint, the three companies said Suno and Udio could generate music that would “saturate the market with machine-generated content that will directly compete with, cheapen and ultimately drown out the genuine sound recordings on which [the services were] built.”

“As artificial intelligence continues to increasingly disrupt the music ecosystem, with a growing amount of AI content flooding streaming platforms like Deezer, we are proud to have developed a cutting-edge tool that will increase transparency for creators and fans alike,” says Alexis Lanternier, CEO of Deezer. “Generative AI has the potential to positively impact music creation and consumption, but its use must be guided by responsibility and care in order to safeguard the rights and revenues of artists and songwriters. Going forward we aim to develop a tagging system for fully AI generated content, and exclude it from algorithmic and editorial recommendation.”

“We set out to create the best AI detection tool on the market, and we have made incredible progress in just one year,” says Aurelien Herault, Deezer’s chief innovation officer. “Tools that are on the market today can be highly effective as long as they are trained on data sets from a specific generative AI model, but the detection rate drastically decreases as soon as the tool is subjected to a new model or new data. We have addressed this challenge and created a tool that is significantly more robust and applicable to multiple models.”

State Champ Radio
